The deluge of Earth Observation (EO) images, amounting to hundreds of terabytes per day, needs to be converted into meaningful information with a large impact on the socio-economic-environmental triangle. Multispectral and microwave EO sensors unceasingly stream millions of samples per second, which must be analysed to extract semantics or physical parameters for understanding Earth's spatio-temporal patterns and phenomena. Typical EO multispectral sensors acquire images in several spectral channels covering the visible and infrared spectra, whereas Synthetic Aperture Radar (SAR) images are represented as complex values encoding modulations in amplitude, frequency, phase or polarization of the collected radar echoes. An important particularity of EO images is their “instrument” nature: in addition to spatial information, they sense physical parameters, and they mainly sense outside of the visual spectrum.

Machine and deep learning methods are mainly used for image classification or object segmentation, usually applied to a single image at a time and tied to visual perception. The tutorial presents specific solutions for the EO sensory and semantic gap.

The tutorial therefore aims to enlarge the concepts of image processing by introducing models and methods for physically meaningful feature extraction, enabling high-accuracy characterization of any structure in large volumes of EO images. The focus is on the advancement of paradigms for stochastic and Bayesian inference, evolving to the methods of deep learning and generative adversarial networks. Since the data sets are an organic part of the learning process, EO dataset biases pose new challenges. The tutorial answers open questions on relative data biases and cross-dataset generalization for very specific EO cases, such as multispectral or SAR observation with a large variability of imaging parameters and semantic content. The challenge of very limited and highly complex training data sets is addressed by introducing paradigms that minimize the amount of computation and learn jointly with the amount of known available data, using cognitive primitives for grasping the behavior of the observed objects or processes. Since sensors are the source of the big data, the tutorial further analyzes methods of computational imaging to optimize EO information sensing. The presentation covers the most advanced methods in synthetic aperture, coded aperture, compressive sensing, data compression, and ghost imaging, as well as the basics of quantum sensing. The overall theoretical trends are summarized from the perspective of practical applications.

Mihai Datcu received the M.S. and Ph.D. degrees in Electronics and Telecommunications from the University Politechnica Bucharest (UPB), Romania, in 1978 and 1986, respectively. In 1999 he received the title Habilitation à diriger des recherches in Computer Science from University Louis Pasteur, Strasbourg, France. Since 1981 he has been a Professor with the Department of Applied Electronics and Information Engineering, Faculty of Electronics, Telecommunications and Information Technology (ETTI), UPB, and since 1993 he has been a scientist with the German Aerospace Center (DLR), Oberpfaffenhofen. Currently he is Senior Scientist and Data Intelligence and Knowledge Discovery research group leader with the Remote Sensing Technology Institute (IMF) of DLR, Oberpfaffenhofen, director of the Research Center for Spatial Information CEOSpaceTech at UPB, and delegate with the DLR-ONERA Joint Virtual Center for AI in Aerospace. His interests are in Bayesian inference, information and complexity theory, machine and deep learning, AI, and computational sensing in optical and radar remote sensing. He is involved in research in Quantum Machine Learning and Quantum radar signal processing. He is a member of the European Quantum Industry Consortium and a visiting professor with the ESA Φ-Lab, involved in the elaboration of a Quantum vision for EO. He is involved in a collaboration with NASA on applications of Quantum Annealing. He has held Visiting Professor appointments with the University of Oviedo, Spain; the University Louis Pasteur and the International Space University, both in Strasbourg, France; the University of Siegen, Germany; the University of Innsbruck, Austria; the University of Alcala, Spain; University Tor Vergata, Rome, Italy; Universidad Pontificia de Salamanca, campus de Madrid, Spain; the University of Camerino, Italy; and the Swiss Center for Scientific Computing (CSCS), Manno, Switzerland. From 1992 to 2002 he had a longer Invited Professor assignment with the Swiss Federal Institute of Technology, ETH Zurich.
Since 2001 he has initiated and led the Competence Centre on Information Extraction and Image Understanding for Earth Observation at ParisTech, Paris Institute of Technology, Telecom Paris, a collaboration of DLR with the French Space Agency (CNES). He has been Professor holder of the DLR-CNES Chair at ParisTech. He initiated the European frame of projects for Image Information Mining (IIM) and is involved in research programs for information extraction, data mining, knowledge discovery and data understanding with the European Space Agency (ESA), NASA, and in a variety of national and European projects. He is a member of the European Big Data from Space Coordination Group (BiDS). He received the Romanian Academy Prize Traian Vuia in 1987 for the development of the SAADI image analysis system and his activity in image processing, the Best Paper Award, IEEE Geoscience and Remote Sensing Society Prize, in 2006, and the National Order of Merit with the rank of Knight, awarded by the President of Romania for outstanding international research results, in 2008. In 2017 he was awarded an International Chair of Excellence Blaise Pascal for recognition in the field of Data Science, with the Centre d’Etudes et de Recherche en Informatique (CEDRIC) at the Conservatoire National des Arts et Métiers (CNAM) in Paris. He is an IEEE Fellow.

Over the course of the last 25 years, media streaming has seen dramatic growth and a transformation from a pioneering concept to a mainstream technology used to deliver all sorts of media today. In this tutorial, we will survey the history of Internet streaming, from the first innovations to the modern architectures and technologies that it relies upon. We will then review the relevant standards as well as their recent extensions to low-latency live streaming and to streaming beyond 2D (omnidirectional and 6DoF formats). We will also review some recent innovations and improvements in technologies supporting streaming: content- and context-aware encoding, improvements in player algorithms, and the use of ML and AI-based approaches for the analysis and prediction of the QoE achievable by streaming systems. At the end, we will also talk about some unsolved problems and technical challenges that still exist in the design of streaming systems.

Positron Emission Tomography (PET) is a molecular medical imaging modality commonly used for the diagnosis of neurodegenerative diseases. It can reflect neural activity by measuring glucose uptake in the resting state. Computer-Aided Diagnosis (CAD) based on medical image analysis can support the quantitative evaluation of brain diseases such as Alzheimer's Disease (AD). CAD systems generally comprise several steps: data acquisition, pre-processing, feature extraction and classification. After a description of the image database on which the evaluations have been done, the pre-processing steps, including spatial registration and image normalization to allow inter-subject comparison, are presented. To overcome the curse of dimensionality, dimension reduction is applied to brain images using an anatomical atlas, which maps the images into Volumes Of Interest (VOIs). A ranking of the effectiveness of brain VOIs in separating Healthy or Normal Control (HC or NC) from AD brain PET images is then presented. Mild Cognitive Impairment (MCI) is a prodromal stage of AD; nevertheless, only part of the MCI subjects progress to AD. Identifying those MCI patients who will develop dementia of the AD type is another important prediction task. Different features, including statistical, graph-based and connectivity-based features, are computed on these VOIs. The multi-level feature representation considers not only volume properties, but also the connectivity between any pair of volumes and the overall connectivity between one volume and the others. Feature selection is another step, which allows VOI ranking. The approach developed is compared to well-known feature selection methods, and the top-ranked VOIs are then input into a classifier; a supervised approach is used for this purpose. Recently, deep learning techniques have delivered impressive performance in recognition and classification tasks, and a great number of researchers have therefore turned to neural networks to address CAD.
To this end, a Multi-view Separable Pyramid Network is proposed, in which representations are learned from the axial, coronal and sagittal views of PET scans so as to offer complementary information, and are then combined to make a joint decision. Unlike the 3D convolution operations widely and naturally used for 3D images, the proposed architecture applies separable convolutions, slice-wise and then spatial-wise, which retains the spatial information and reduces the number of training parameters compared to 2D and 3D networks, respectively. The different methods developed are evaluated on a public database and compared to known feature selection and classification methods. The new approaches improved on the classification results of these methods in the case of a two-group separation.
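The parameter saving attributed to separable convolutions can be illustrated with a simple count. The sketch below is not the architecture of the proposed network: it assumes a generic factorisation of a full k×k×k 3D convolution into a slice-wise (k×1×1) convolution followed by a spatial-wise (1×k×k) convolution, and the channel counts and kernel size are hypothetical.

```python
def conv3d_params(c_in, c_out, k):
    """Weights plus biases of a full k x k x k 3D convolution."""
    return c_in * c_out * k ** 3 + c_out


def separable_params(c_in, c_out, k):
    """Assumed factorisation: slice-wise (k x 1 x 1) then spatial-wise (1 x k x k)."""
    slice_wise = c_in * c_out * k + c_out          # 1D kernel along the slice axis
    spatial_wise = c_out * c_out * k * k + c_out   # 2D kernel within each slice
    return slice_wise + spatial_wise


if __name__ == "__main__":
    # Hypothetical layer sizes, chosen only for illustration.
    c_in, c_out, k = 16, 16, 3
    full = conv3d_params(c_in, c_out, k)
    sep = separable_params(c_in, c_out, k)
    print(f"full 3D: {full}, separable: {sep}, ratio: {sep / full:.2f}")
```

Under these assumptions the factorised layer needs fewer than half the parameters of the full 3D layer, while still mixing information along all three axes.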

Mouloud Adel is a Professor in Computer Science and Electrical Engineering at Aix-Marseille University, Marseille, France. His research areas concern signal and image processing and machine learning applied to biomedical and industrial images. He received his Engineering degree in electrical engineering from the Ecole Nationale Supérieure d’Electricité et de Mécanique (ENSEM) of Nancy, France, as well as his Master’s degree in electronic and feedback control systems in 1990. He obtained his PhD degree from the Institut National Polytechnique de Lorraine (INPL) of Nancy in 1994 in image processing. He has been the supervisor of more than ten PhD students. He has been involved in many international research programs (Germany, Algeria, United Kingdom) and he is a member of the editorial board of Journal of Biomedical Engineering and Informatics. He has been an invited speaker at Polytechnic Institute of Technology of Algiers (Algeria) and at Parma University (Italy), and chaired a special session "Statistical Image Analysis for computer-aided detection and diagnosis on Medical and Biological Images" in IPTA 2014 (IEEE International Conference on Image Processing Theory and Application, Paris). He has been the co-organizer of various international conferences and workshops. He also served as a regular reviewer, associate and guest editor for a number of journals and conferences. He is the author or co-author of 60 journal and conference papers. He has been one of the invited speakers of the IEEE International Conference on Image Processing Theory Tools and Application in November 2020 at Paris, France.

Xiaoxi Pan received the Ph.D. degree from Ecole Centrale Marseille, France in 2019. Before that, she obtained M.E. and B.S. degrees from Beihang University and Northwestern Polytechnical University, China in 2013 and 2016, respectively. She is currently a postdoctoral training fellow at the Institute of Cancer Research, UK. Her research interests lie in medical image processing and analysis.

The huge success of deep-learning-based approaches in computer vision has inspired research in learned solutions to classic image/video processing problems, such as restoration, super-resolution (SR), and compression. Many researchers have reported results that exceed the state of the art in image/video restoration and SR by a wide margin via supervised learning, using pairs of ground-truth (GT) images/video and degraded or low-resolution (LR) images/video generated by known degradation models, such as bicubic downsampling. Recent work in learned image compression has focused on jointly learning variational auto-encoders with models of the latent variable probability distributions. Adding side information (“hyper priors”) to allow the probability models themselves to adapt locally resulted in learned models that exceeded the performance of traditional image encoders (e.g., BPG). Given these successes, learning-based methods have emerged as a promising nonlinear signal-processing framework, and the state of the art in image/video restoration, SR, and compression is being redefined. This tutorial presents the state-of-the-art fundamental deep network architectures and training methods used for image/video restoration and compression, including advances in modeling temporal correlations and motion in video. We also cover several key current and future research issues, including generalization of image restoration and SR results to real-world problems, efficiency of learned models, and perceptual optimization of the results.
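The supervised setup described above relies on (LR, GT) pairs produced by a known degradation model. The following is a minimal sketch of that idea in plain Python, with two stated substitutions: a box filter (block averaging) stands in for bicubic downsampling, and nearest-neighbour upsampling stands in for a learned SR model. All function names are illustrative.

```python
import math


def downsample(img, factor):
    """Average non-overlapping factor x factor blocks (box filter, NOT bicubic)."""
    h, w = len(img), len(img[0])
    out = []
    for r in range(0, h - h % factor, factor):
        row = []
        for c in range(0, w - w % factor, factor):
            block = [img[r + i][c + j] for i in range(factor) for j in range(factor)]
            row.append(sum(block) / len(block))
        out.append(row)
    return out


def upsample_nearest(img, factor):
    """Placeholder for a learned SR model: repeat each pixel factor x factor times."""
    out = []
    for row in img:
        wide = [v for v in row for _ in range(factor)]
        out.extend([list(wide) for _ in range(factor)])
    return out


def psnr(ref, test, peak=255.0):
    """Peak signal-to-noise ratio in dB between two equally sized images."""
    diffs = [(a - b) ** 2 for ra, rb in zip(ref, test) for a, b in zip(ra, rb)]
    mse = sum(diffs) / len(diffs)
    return math.inf if mse == 0 else 10.0 * math.log10(peak ** 2 / mse)


if __name__ == "__main__":
    # Synthetic 8x8 "ground-truth" image used only for illustration.
    gt = [[(r * 16 + c * 8) % 256 for c in range(8)] for r in range(8)]
    lr = downsample(gt, 2)        # degraded input of the training pair
    sr = upsample_nearest(lr, 2)  # baseline reconstruction
    print(len(lr), len(lr[0]), psnr(gt, sr))
```

A model trained on such synthetic pairs only sees the assumed degradation, which is one reason generalization to real-world inputs is listed as an open issue.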

PART 1: Learned Image/Video Restoration and Superresolution

We discuss different image restoration problems, the state-of-the-art network architectures and learned models for image/video restoration and super-resolution (SR), their ability to generalize to real-world applications, and evaluation of the results of these models, including:

PART 2: Learned Image/Video Compression

End-to-end optimized learned models may replace transforms such as the discrete cosine transform and the wavelet transform with variational auto-encoders that learn image-dependent transforms, or with generative codecs that are trained to synthesize decoded images without artefacts. Learned models have also been employed as tools, such as pre- and/or post-processing filters. Recent research indicates that learned transform models can be highly effective for uni-directional (low-delay) and bi-directional video compression. We cover:

A. Murat Tekalp received the Ph.D. degree in Electrical, Computer, and Systems Engineering from Rensselaer Polytechnic Institute (RPI), Troy, New York, in 1984. He was with Eastman Kodak Company, Rochester, New York, from 1984 to 1987, and with the University of Rochester, Rochester, New York, from 1987 to 2005, where he was promoted to Distinguished University Professor. He is currently Professor at Koc University, Istanbul, Turkey. He served as Dean of Engineering from 2010 to 2013. His research interests are in digital image and video processing, including video compression and streaming, video networking, and image/video processing using deep learning.

He is a Fellow of the IEEE and a member of Academia Europaea. He served as an Associate Editor for the IEEE Trans. on Signal Processing (1990-1992) and IEEE Trans. on Image Processing (1994-1996). He chaired the IEEE Signal Processing Society Technical Committee on Image and Multidimensional Signal Processing (Jan. 1996 - Dec. 1997). He was the Editor-in-Chief of the EURASIP journal Signal Processing: Image Communication, published by Elsevier, from 1999 to 2010. He was the General Chair of the IEEE Int. Conf. on Image Processing (ICIP) at Rochester, NY in 2002, and Technical Program Co-Chair of ICIP 2020, which was held as an on-line event. He was on the Editorial Board of the IEEE Signal Processing Magazine (2007-2010). He has served on the ERC Advanced Grants Evaluation Panel (2009-2015) and on the Editorial Board of Proceedings of the IEEE (2014-2020). He has been serving on the Editorial Board of Wiley-IEEE Press since 2018. Dr. Tekalp authored the Prentice Hall book Digital Video Processing (1995), a completely rewritten second edition of which was published in 2015.

The framework entitled General Adaptive Neighborhood Image Processing and Analysis (GANIPA) has been introduced in order to propose an original local image representation and mathematical structure for adaptive non-linear processing and analysis of gray-tone images and further extended to color and multispectral images. The central idea is based on the key notion of adaptivity which is simultaneously associated with the analyzing scales, the spatial structures and the intensity values of the image to be addressed. Several adaptive image operators are then defined in the context of image filtering, image segmentation, image measurements and image registration by the use of convolution analysis, order filtering, mathematical morphology, integral geometrical or similarity measures. Such operators are no longer spatially invariant, but vary over the whole image with General Adaptive Neighborhoods (GANs) as adaptive operational windows, taking intrinsically into account the local image features.

The first part of this tutorial will focus on the context, definitions and properties of the GANs. Once these adaptive neighborhoods are defined, it is possible to build different operators for image processing (filtering such as enhancement/restoration, segmentation, registration...) and also for image analysis, providing tools for local image measurements (integral geometry, shape diagrams). The second part will focus on these new operators, illustrated on real applications in different areas (biomedical, material, process engineering, remote sensing...). Finally, some conclusions and prospects will be given: the GANIPA framework allows efficient adaptive image operators to be built (using local adaptive operational windows) and opens new pathways that promise large prospects for nonlinear image processing and analysis.
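To make the idea of an adaptive operational window concrete, the sketch below builds a neighborhood around each pixel and uses it for a mean filter. It rests on an assumption not stated in the abstract: the neighborhood of a pixel is taken to be the 4-connected set of pixels whose intensity differs from that pixel's intensity by at most a tolerance `m`. The function names and the toy image are illustrative only.

```python
from collections import deque


def adaptive_neighborhood(img, seed, m):
    """Return the 4-connected set of pixels within tolerance m of the seed intensity."""
    h, w = len(img), len(img[0])
    ref = img[seed[0]][seed[1]]
    region = {seed}
    queue = deque([seed])
    while queue:
        r, c = queue.popleft()
        for nr, nc in ((r - 1, c), (r + 1, c), (r, c - 1), (r, c + 1)):
            inside = 0 <= nr < h and 0 <= nc < w
            if inside and (nr, nc) not in region and abs(img[nr][nc] - ref) <= m:
                region.add((nr, nc))
                queue.append((nr, nc))
    return region


def adaptive_mean_filter(img, m):
    """Replace each pixel by the mean over its own adaptive neighborhood."""
    out = []
    for r in range(len(img)):
        row = []
        for c in range(len(img[0])):
            region = adaptive_neighborhood(img, (r, c), m)
            row.append(sum(img[i][j] for i, j in region) / len(region))
        out.append(row)
    return out


if __name__ == "__main__":
    # Toy image: a dark area (two left columns) next to a bright area (right column).
    img = [[10, 11, 50],
           [10, 12, 52],
           [9, 11, 51]]
    for row in adaptive_mean_filter(img, m=3):
        print(row)
```

In this toy case the window never crosses the step between the dark and bright areas, so each area is smoothed separately and the edge is preserved, which a fixed square window would not guarantee.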

Johan Debayle received his M.Sc., Ph.D. and Habilitation degrees in the field of image processing and analysis in 2002, 2005 and 2012, respectively. Currently, he is a Full Professor at the Ecole Nationale Supérieure des Mines de Saint-Etienne (MINES Saint-Etienne) in France, within the SPIN Center and the LGF Laboratory, UMR CNRS 5307, where he leads both the PMMG and PMDM departments, which are interested in image processing and analysis of granular media. He is also the Deputy Director of the MORPHEA CNRS GDR 2021 Research Group. He is the Head of the Master of Science in Mathematical Imaging and Spatial Pattern Analysis (MISPA) at the ENSM-SE. He is the General Chair of the IEEE ISIVC’2020, ECSIA’2021 and ICIVP’2021 international conferences. He is Associate Editor of three international journals (PAA, JEI, IAS).

His research interests include image processing and analysis, mathematical morphology, pattern recognition and stochastic geometry. He has published more than 140 international papers in book chapters, international journals and conference proceedings. He has been Invited Professor at different universities: ITWM Fraunhofer / University of Kaiserslautern (Germany), University Gadjah Mada, Yogyakarta (Indonesia), and University of Puebla (Mexico), and is currently co-supervisor of several PhD students with these partners. He has served as Program Committee member for several IEEE international conferences (ICIP, CISP, ISM, EFTA, WOSSPA). He has been invited as keynote speaker at several international conferences (IEEE ICIP, SPIE EI, ICMV, DCS, CIMA, ICST, ISIVC, ICPRS). He is a reviewer for several IEEE international journals (TIP, SMC-A, SMC-B, JBHI, SJ, JSTARS).

He is Senior Member of the Institute of Electrical and Electronics Engineers (IEEE) within the Signal Processing Society (SPS), and Vice-Chair Membership of the IEEE France Section. More information is given on Prof. Johan Debayle’s homepage: http://www.emse.fr/~debayle/

Security and monitoring systems are increasingly being deployed to control and prevent abnormal events, especially in situational awareness applications, before irreversible damage occurs, such as national security breaches, deaths, and infrastructure destruction. The security and protection of airports, national infrastructures, and private or public services require cost-effective solutions for use in both low- and high-risk environments. Such solutions would lead to increased security levels and thus to higher societal and economic impact. With the continuous rapid development of high-performance computing tools and technologies, and the availability of cost-effective distributed sensor nodes, video surveillance is currently experiencing a rather spectacular turning point. In particular, data-driven, computationally intensive artificial intelligence approaches, notably deep learning models, have not only revolutionized the video surveillance domain; many other fields, such as medical imaging and other vision-related problems, have also benefited from the progress in the field. One of the most challenging problems in video surveillance is how to control the quality of the acquired signal. Indeed, video-based surveillance systems often suffer from poor-quality video, especially in uncontrolled environments, which may induce several types of distortions such as noise, uneven illumination, blur, saturation, and atmospheric degradations. This may strongly affect the performance of high-level tasks such as visual tracking, abnormal event detection or, more generally, scene understanding and interpretation. This tutorial provides an overview of the most recent trends and future research in video surveillance. We will focus on the most important tasks and difficulties related to the quality of the acquired and transmitted images/videos in uncontrolled environments, and on high-level tasks such as visual tracking and abnormal event detection.
For visual tracking, we will discuss the difference between online and offline tracking. The tracking-by-detection paradigm for multi-target tracking will be scrutinized. In particular, the building blocks of tracking, such as the appearance model, motion model, detection model, association model, and mutual interaction model, will be examined, and the associated research challenges will be highlighted. For visual scene understanding, both atomistic and holistic crowd motion analysis will be presented. The progress and research trends in abnormal event detection, crowd behavior classification, crowd counting, and congestion analysis will be reviewed. In a nutshell, we will discuss some challenges, solutions, results, and promising avenues to move towards completely intelligent end-to-end systems.
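As one illustration of an association model in tracking-by-detection, the sketch below matches existing track boxes to new detection boxes greedily by intersection over union (IoU). This is a deliberately simple stand-in chosen for the example, not a method attributed to the tutorial; the box format `(x1, y1, x2, y2)`, the function names, and the `min_iou` threshold are assumptions.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def associate(tracks, detections, min_iou=0.3):
    """Greedily match tracks to detections in order of decreasing IoU.

    tracks: dict mapping integer track id -> box; detections: list of boxes.
    Returns (matches, unmatched) where matches maps track id -> detection index
    and unmatched lists detection indices that could start new tracks.
    """
    pairs = sorted(
        ((iou(box, det), tid, j)
         for tid, box in tracks.items()
         for j, det in enumerate(detections)),
        reverse=True,
    )
    matches, used = {}, set()
    for score, tid, j in pairs:
        if score < min_iou:
            break  # remaining pairs overlap too little to be the same target
        if tid in matches or j in used:
            continue
        matches[tid] = j
        used.add(j)
    unmatched = [j for j in range(len(detections)) if j not in used]
    return matches, unmatched


if __name__ == "__main__":
    tracks = {1: (0, 0, 10, 10), 2: (20, 20, 30, 30)}
    detections = [(21, 21, 31, 31), (1, 1, 11, 11), (50, 50, 60, 60)]
    print(associate(tracks, detections))
```

Practical systems typically combine such a geometric cue with appearance and motion models and often use an optimal assignment instead of a greedy one.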

Azeddine BEGHDADI has been a Full Professor at the University of Paris 13 (Institut Galilée), Sorbonne Paris Cité, since 2000, and was the director of the L2TI laboratory from 2010 to 2016. He received the Master's degree in Optics and Signal Processing from University Paris-Saclay in 1983 and the PhD in Physics (Specialism: Optics and Signal Processing) from Sorbonne University in June 1986. Dr. Beghdadi worked at different places during his PhD thesis, including the "Laboratoire d’Optique des Solides" (Sorbonne University) and the "Groupe d’Analyse d’Images Biomédicales" (CNAM Paris). From 1987 to 1989, he was appointed "Assistant Associé" (Lecturer) at University Sorbonne Paris Nord. During the period 1987-1998, he was with the LPMTM CNRS Laboratory, working on Scanning Electron Microscope (SEM) materials image analysis. He has published more than 300 international refereed scientific papers. He is a founding member of the L2TI laboratory. His research interests include image quality enhancement and assessment, image and video compression, bio-inspired models for image analysis and processing, and physics-based image analysis. Dr. Beghdadi is the founder and Co-Chair of the European Workshop on Visual Information Processing (EUVIP). He is a member of the editorial boards of "Signal Processing: Image Communication" (Elsevier), the EURASIP Journal on Image and Video Processing, the Journal of Electronic Imaging, and the Mathematical Problems in Sciences Journal. He is a Senior Member of IEEE, an elected member of the EURASIP VIP-TAC, and an elected member of the IEEE-MMSP.

Personal Webpage: https://www-l2ti.univ-paris13.fr/~beghdadi/

Mohib Ullah received the bachelor’s degree in electronic and computer engineering from the Politecnico di Torino, Italy, in 2012, the master’s degree in telecommunication engineering from the University of Trento, Italy, in 2015, and the Ph.D. degree in computer science from the Norwegian University of Science and Technology (NTNU), Norway, in 2019. He is currently a Postdoctoral Research Fellow with NTNU, where he is involved in several industrial projects related to video surveillance. He teaches courses on machine learning and computer vision. His research interests include medical imaging and video surveillance, especially crowd analysis, object segmentation, behavior classification, and tracking. In these research areas, he has published more than 30 peer-reviewed journal, conference, and workshop articles. He served as a Program Committee Member for the International Workshop on Computer Vision in Sports (CVsports) at CVPR 2018, 2019, and 2020. He also served as a Chair for the Technical Program at the European Workshop on Visual Information Processing. He is a reviewer for well-reputed conferences and journals (Neurocomputing (Elsevier), Neural Computing and Applications (Elsevier), Multimedia Tools and Applications (Springer), IEEE ACCESS, the Journal of Imaging, Sensors, IEEE CVPRw, IEEE ICIP, and IEEE AVSS).

Personal Webpage: https://www.ntnu.edu/employees/mohib.ullah

Person re-identification (Re-ID) is the problem of retrieving all the images of a query person from a large gallery, where query and gallery images are captured by distinctively different cameras with non-overlapping views. The problem is challenging, and the demand for efficient solutions in security and surveillance applications is steadily increasing. Thus, the person Re-ID problem has increasingly attracted attention from the image processing and computer vision community in recent years. This tutorial will provide a basic understanding of person Re-ID as well as a comprehensive analysis of state-of-the-art solutions.

In the first part of the tutorial, a comprehensive overview of the problem will be given for two major categories: closed-world and open-world settings. We will discuss different aspects of both categories by considering their application areas, basic building blocks, datasets, and a comparative analysis of state-of-the-art solutions on public datasets. Major aspects such as feature representation learning, deep metric learning, ranking organization, datasets, and evaluation protocols in closed-world settings, as well as cross-modality, limited labels, noisy annotation, and efficiency issues of open-set Re-ID, will be covered in addition to the analysis of state-of-the-art solutions. In the second and third parts of the tutorial, we will focus on the details of one sample state-of-the-art solution in each category for a deeper understanding of the building blocks of successful systems: person Re-ID in the visible domain using human semantic parsing, and visible-infrared cross-modality person Re-ID, will be presented as detailed examples of closed- and open-world Re-ID, respectively.

In the last part of the tutorial, current challenges and future work in person Re-ID will be considered. This part discusses the need for person Re-ID solutions that are long-term, scalable, efficient, dynamic, and robust to clothing changes.
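The retrieval formulation of person Re-ID, and the rank-k style of evaluation commonly used for it, can be sketched as follows. This is a simplified illustration under stated assumptions: features are plain numeric tuples (in practice they would come from a learned representation), Euclidean distance stands in for a learned metric, and real evaluation protocols add further rules that are omitted here. All names and data are hypothetical.

```python
import math


def euclidean(a, b):
    """Euclidean distance between two feature vectors of equal length."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def rank_gallery(query_feat, gallery):
    """Return gallery person IDs sorted by increasing distance to the query.

    gallery: list of (person_id, feature) pairs.
    """
    ranked = sorted(gallery, key=lambda item: euclidean(query_feat, item[1]))
    return [pid for pid, _ in ranked]


def rank_k_accuracy(queries, gallery, k):
    """Fraction of queries whose true ID appears among the top-k gallery results.

    queries: list of (true_person_id, feature) pairs.
    """
    hits = sum(1 for pid, feat in queries if pid in rank_gallery(feat, gallery)[:k])
    return hits / len(queries)


if __name__ == "__main__":
    # Toy 2-D features; identities "a", "b", "c" are made up for the example.
    gallery = [("a", (0.0, 0.0)), ("b", (1.0, 1.0)), ("c", (5.0, 5.0))]
    queries = [("a", (0.1, 0.0)), ("c", (0.9, 1.2))]
    for k in (1, 2, 3):
        print(k, rank_k_accuracy(queries, gallery, k))
```

In the toy data the second query lies far from its true identity in feature space, so it only counts as a hit at rank 3; feature and metric learning aim to move such matches to the top of the ranking.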

Muhittin Gökmen received his BS degree in electrical engineering from the Istanbul Technical University, Turkey, in 1982. He received the MS and PhD degrees in electrical engineering from the University of Pittsburgh in 1986 and 1990, respectively. In 1991, he served as a post-doctoral research associate at the same university. In 1992, he joined the Computer Engineering Department at the Istanbul Technical University as an assistant professor, and became associate professor and professor there in 1993 and 1999, respectively. He served as dean of the Faculty of Electrical and Electronics Engineering from 2001 to 2004, and as the chair of the Computer Engineering Department at ITU. He joined MEF University in 2014 as the Department Chair for the Department of Computer Engineering. He was appointed the Director of the Graduate School of Science and Engineering of this university in 2015.

Dr. Gökmen’s research interests include image processing and computer vision, specifically early-vision problems, face recognition, person re-identification, and traffic video analysis. He was the founding director of the Computer Vision and Image Processing (CVIP) Laboratory, the Multimedia Center, and the Software Development Center at the Istanbul Technical University. He was the chair of the Third Workshop on Signal Processing and Applications (SIU’95) organized in 1995, a member of the organizing committee of the 10th International Symposium on Computer and Information Sciences (ISCIS X) in 1995, co-chair of the Workshop on Signal Processing and Applications (SIU’04) held in 2004, and the general chair of the 23rd International Symposium on Computer and Information Sciences (ISCIS’08) held in 2008. Dr. Gökmen was the Student Relations Chairman of the IEEE Turkey Section from 1994 to 1996, and the IEEE Student Branch Counselor at the Istanbul Technical University. He is the founder of a company, Divit-Digital Video and Image Technologies Inc., which is the first innovation company founded by a faculty member at ITU Technopark. He serves as IEEE Student Branch Counselor at MEF University.

In recent years, screen content videos, including computer-generated text, graphics and animations, have drawn more attention than ever, as many related applications have become very popular. However, conventional video codecs are typically designed to handle camera-captured, natural video. Screen content video, on the other hand, exhibits distinct signal characteristics, and human visual sensitivity to distortions in it varies. To address the need for efficient coding of such content, a number of coding tools have been specifically developed and have achieved great advances in coding efficiency.

The importance of screen content applications is underlined by the fact that all of the recently developed video coding standards have included screen content coding (SCC) features. Nevertheless, the considerations behind the inclusion of SCC tools in these standards are quite different. Each standard typically adopts only a subset of the known tools. Further, when one particular coding tool is adopted in more than one standard, its technical features may vary considerably from one standard to another.

All of this has caused confusion both for researchers who want to further explore SCC on top of the state of the art and for engineers who want to choose a codec particularly suitable for their targeted products. Information on SCC technologies in general, and on specific tool designs in these standards, is of great interest. This tutorial provides an overview and comparative study of screen content coding (SCC) technologies across a few recently developed video coding standards, namely HEVC SCC, VVC, AVS3, AV1 and EVC. In addition to the technical introduction, the performance and design/implementation complexity aspects of the SCC tools are discussed, aiming to provide a detailed and comprehensive report. The overall performance of these standards is also compared in the context of SCC. The SCC tools under discussion are listed as follows:

Screen content coding specific technologies:

Screen content coding related technologies:

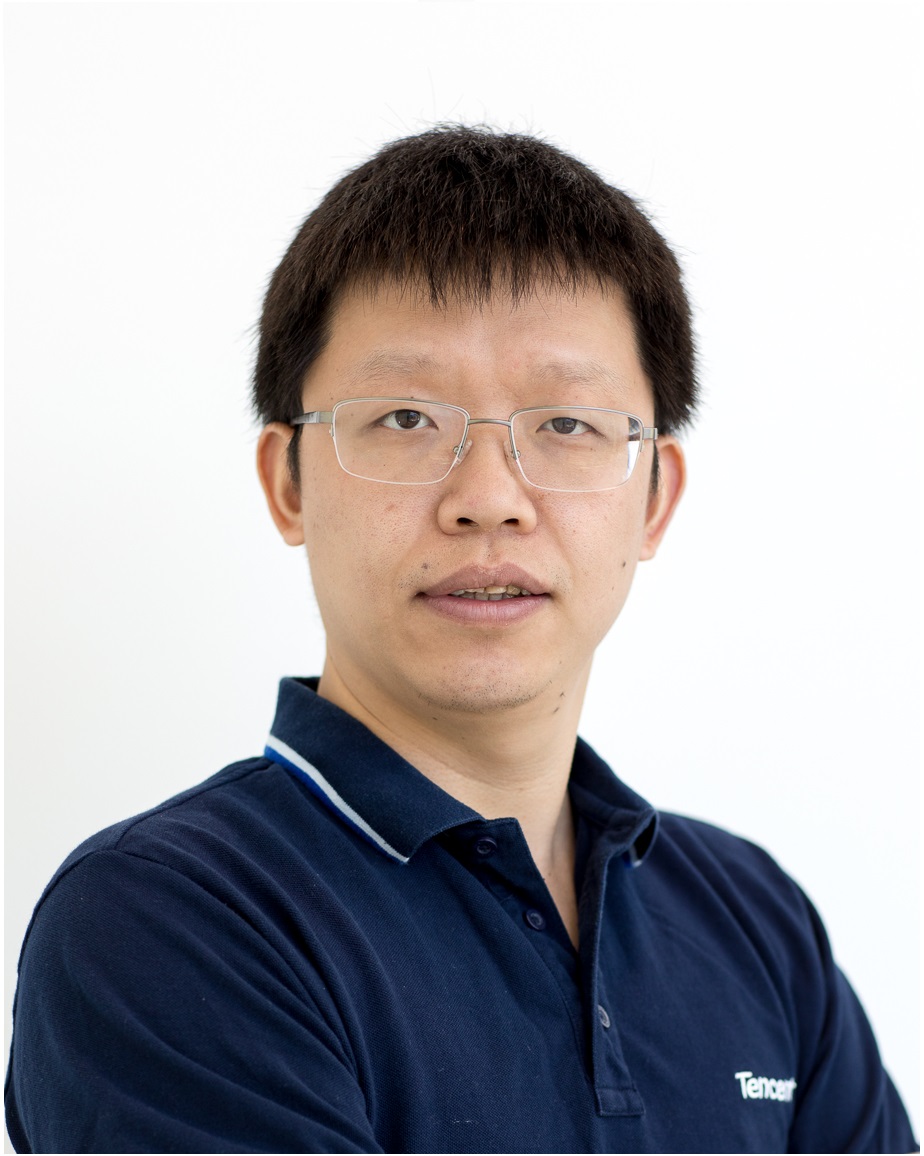

Xiaozhong Xu has been a Principal Researcher and Senior Manager of Multimedia Standards at Tencent Media Lab, Palo Alto, CA, USA, since 2017. He was with MediaTek USA Inc., San Jose, CA, USA as a Senior Staff Engineer and Department Manager of Multimedia Technology Development, from 2013 to 2017. Prior to that, he worked for Zenverge (acquired by NXP in 2014), a semiconductor company focusing on multi-channel video transcoding ASIC design, from 2011 to 2013. He also held technical positions at Thomson Corporate Research (now Technicolor) and Mitsubishi Electric Research Laboratories. His research interest lies in the general area of multimedia, including video and image coding, processing and transmission. He has been an active participant in video coding standardization activities for over fifteen years. He has successfully contributed to various standards including H.264/AVC and its extensions, AVS1 and AVS3 (China), HEVC and its extensions, MPEG-5 EVC and the most recent H.266/VVC standard. He served as a core experiment (CE) coordinator and a key technical contributor for screen content coding developments in various video coding standards (HEVC, VVC, EVC and AVS3). Xiaozhong Xu received the B.S. and Ph.D. degrees from Tsinghua University, Beijing China in electronics engineering, and the MS degree from Polytechnic school of engineering, New York University, NY, USA, in electrical and computer engineering.

Shan Liu received the B.Eng. degree in Electronic Engineering from Tsinghua University, and the M.S. and Ph.D. degrees in Electrical Engineering from the University of Southern California. She is currently a Tencent Distinguished Scientist and General Manager of Tencent Media Lab. She was formerly Director of the Media Technology Division at MediaTek USA, and was also formerly with MERL and Sony, among others. Dr. Liu has been actively contributing to international standards for more than 10 years and has had more than 100 technical proposals adopted into various standards, such as VVC, HEVC, OMAF, DASH, MMT and PCC. She has chaired and co-chaired numerous AHGs throughout standard development and served as co-Editor of H.265/HEVC SCC and H.266/VVC. She has also directly contributed to and led the development of technologies and products that have served several hundred million users. Dr. Liu holds more than 200 granted US and global patents and has published more than 100 journal and conference papers. She served on the Industrial Relationship Committee of the IEEE Signal Processing Society (2014-2015). She served as VP of Industrial Relations and Development of the Asia-Pacific Signal and Information Processing Association (2016-2017) and was named APSIPA Industrial Distinguished Leader in 2018. She has been on the Editorial Board of IEEE Transactions on Circuits and Systems for Video Technology since 2018 and received the Best AE Award in both 2019 and 2020. She has also served as a guest editor for several T-CSVT special issues and special sections. She has been serving as Vice Chair of the IEEE Data Compression Standards Committee since 2019. Her research interests include audio-visual, high volume, immersive and emerging media compression, intelligence, transport and systems.

The worldwide flourishing of the Internet of Things (IoT) in the past decade has enabled numerous new applications through the internetworking of a wide variety of devices and sensors. More recently, visual sensors have seen considerable growth in IoT systems because they can provide richer and more versatile information. The internetworking of large-scale visual sensors has been named the Internet of Video Things (IoVT). IoVT has its own unique characteristics in terms of sensing, transmission, storage, and analysis, which are fundamentally different from those of the conventional IoT. These new characteristics of IoVT are expected to impose significant challenges on existing technical infrastructures. In this tutorial, an overview of recent advances on various fronts of IoVT will be introduced and a broad range of technological and system challenges will be addressed. Several emerging IoVT applications will be discussed to illustrate the potential of IoVT in a broad range of practical scenarios.

Furthermore, with the accelerated proliferation of the IoVT in myriad forms, the acquired visual data are characterized by prohibitively high data volume but low value density. These unprecedented challenges motivate a radical shift from traditional visual information representation techniques (e.g., video coding) to a biologically inspired information processing paradigm (e.g., digital retina coding). In this tutorial, we will further introduce methodologies that could facilitate the efficient, flexible and reliable representation of IoVT data based on three streams: texture, feature and model. With the rising popularity of machine vision technologies that enable better perception of IoVT data, we believe that the need for an IoVT that realizes such efficient representation will become more pronounced. We hope that this introduction will stimulate fruitful results and inspire future research in this fast-evolving field.

Outline and Key References

Part I:

Part II:

Chang Wen Chen is an Empire Innovation Professor of Computer Science and Engineering at the University at Buffalo, State University of New York. He was the Editor-in-Chief of IEEE Trans. Multimedia from 2014 to 2016 and of IEEE Trans. Circuits and Systems for Video Technology from 2006 to 2009. His research areas include multimedia systems, multimedia communication, Internet of Video Things, visual surveillance, smart visual sensors, smart city, and tele-immersive systems. He has received numerous awards, including 9 best paper awards, the Alexander von Humboldt Research Award, and the UIUC ECE Distinguished Alumni Award. He is an IEEE Fellow and an SPIE Fellow.

Shiqi Wang is an Assistant Professor of Computer Science at the City University of Hong Kong. He holds a Ph.D. (2014) from Peking University and a B.Sc. in Computer Science from the Harbin Institute of Technology (2008). He has submitted over 40 technical proposals to ISO/MPEG, ITU-T, and AVS standards, and authored or coauthored more than 200 refereed journal/conference articles. His research interests include video compression, quality assessment, and analysis.

Due to recent advances in visual capture technology, point clouds have been recognized as a crucial data structure for 3D content. In particular, point clouds (PCs) are essential for numerous applications such as virtual and mixed reality, 3D content production, sensing for autonomous vehicle navigation, architecture and cultural heritage. Efficient point cloud processing and coding techniques are fundamental to enabling the practical use of PCs, making this a hot topic both in academic research and in industry. Point cloud coding is also currently the subject of ongoing standardization activities in MPEG, and a first version of the G-PCC and V-PCC standards has recently been released. In this context, learning-based methods for point cloud processing and coding have attracted a great deal of attention in the past couple of years, thanks to their effectiveness in learning good 3D features and their competitive performance against more traditional PC coding techniques.

This tutorial intends to provide an overview of recent advances in learning-based processing and coding of 3D point clouds (PCs). More specifically, the course will introduce and review the most popular point cloud representations, including “native” representations (unordered point sets), and “non-native” PC representations such as octrees, voxels and 2D projections. We intend to cover the state of the art processing and coding approaches for the different representations, discuss the relevant data-driven approaches, review some of the standardization efforts, and explore new research directions.
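
As a rough illustration of the difference between a “native” unordered point set and a “non-native” voxel representation, the following minimal Python sketch quantizes points onto a regular grid. The function name `voxelize` and the `voxel_size` parameter are hypothetical choices made for this example; the sketch is not taken from G-PCC, V-PCC or any learning-based codec.

```python
import math
from typing import Iterable, Set, Tuple

Point = Tuple[float, float, float]
Voxel = Tuple[int, int, int]


def voxelize(points: Iterable[Point], voxel_size: float) -> Set[Voxel]:
    """Map an unordered set of 3D points to the set of occupied voxels.

    Each coordinate is divided by the (assumed uniform) voxel edge length
    and floored, so all points inside the same cube share one index.
    """
    if voxel_size <= 0:
        raise ValueError("voxel_size must be positive")
    occupied: Set[Voxel] = set()
    for x, y, z in points:
        occupied.add((
            math.floor(x / voxel_size),
            math.floor(y / voxel_size),
            math.floor(z / voxel_size),
        ))
    return occupied


if __name__ == "__main__":
    cloud = [(0.1, 0.2, 0.3), (0.4, 0.4, 0.4), (1.2, 0.0, 0.0)]
    print(sorted(voxelize(cloud, voxel_size=0.5)))
```

The result does not depend on the order of the input points, which is one reason grid-based representations are convenient for convolutional processing; the price is the loss of geometric precision below the voxel size.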

Giuseppe Valenzise is a CNRS researcher (chargé de recherches) at the Université Paris-Saclay, CNRS, CentraleSupélec, Laboratoire des Signaux et Systèmes (L2S, UMR 8506), in the Telecom and Networking hub. Giuseppe completed a master degree and a Ph.D. in Information Technology at the Politecnico di Milano, Italy, in 2007 and 2011, respectively. In 2012, he joined the French Centre National de la Recherche Scientifique (CNRS) as a permanent researcher, first at the Laboratoire Traitement et Communication de l’Information (LTCI) Telecom Paristech, and from 2016 at L2S. He got the French « Habilitation à diriger des recherches » (HDR) from Université Paris-Sud in 2019. His research interests span different fields of image and video processing, including traditional and learning-based image and video compression, light fields and point cloud coding, image/video quality assessment, high dynamic range imaging and applications of machine learning to image and video analysis. He is co-author of more than 70 research publications and of several award-winning papers. He is the recipient of the EURASIP Early Career Award 2018. Giuseppe serves/has served as Associate Editor for IEEE Transactions on Circuits and Systems for Video Technology, IEEE Transactions on Image Processing, Elsevier Signal Processing: Image communication. He is an elected member of the MMSP and IVMSP technical committees of the IEEE Signal Processing Society for the term 2018-2023, as well as a member of the Technical Area Committee on Visual Information Processing of EURASIP. Giuseppe gave a tutorial on High Dynamic Range Video at ICIP 2016.

Dong Tian (M'09--SM'16) is currently a Senior Principal Engineer / Research Manager with InterDigital, Princeton, NJ, USA. He worked as a Senior Principal Research Scientist at MERL, Cambridge, MA, USA from 2010-2018, a Senior Researcher with Thomson Corporate Research, Princeton, NJ, USA, from 2006-2010, and a Researcher at Tampere University of Technology from 2002-2005. His research interests include image processing, 3D video, point cloud processing, graph signal processing, and deep learning. He has been actively contributing in both academic and industrial communities. He has 60+ academic publications on top-tier journals/transactions and conferences. He also holds 40+ US-granted patents. Dr. Tian serves as an AE of TIP (2018-2021), General Co-Chair of MMSP'20, MMSP’21, TPC chair of MMSP'19, etc. He is a current elected TC member of IEEE MMSP, MSA and IDSP. He is a senior member of IEEE. Dong Tian received the B.S. and M.Sc degrees from University of Science and Technology of China, Hefei, China, in 1995 and 1998, and the Ph.D. degree from Beijing University of Technology, Beijing, in 2001.

Multi-Layer Perceptrons (MLPs) and their derivatives, Convolutional Neural Networks (CNNs), have a common drawback: they employ a homogeneous network structure with an identical “linear” neuron model. This naturally makes them only a crude model of biological neurons or mammalian neural systems, which are heterogeneous and composed of highly diverse neuron types with distinct biochemical and electrophysiological properties. With such crude models, conventional homogeneous networks can learn sufficiently well problems with a monotonous, relatively simple, and linearly separable solution space, but they fail to do so whenever the solution space is highly nonlinear and complex. To address this drawback, a heterogeneous and dense network model, Generalized Operational Perceptrons (GOPs), has recently been proposed. GOPs aim to model biological neurons with distinct synaptic connections. GOPs have demonstrated the superior diversity encountered in biological neural networks, which has resulted in an elegant performance level on numerous challenging problems where conventional MLPs entirely failed. Following in the footsteps of GOPs, a heterogeneous and non-linear network model called the Operational Neural Network (ONN) has recently been proposed as a superset of CNNs. ONNs, like their predecessor GOPs, boost the diversity needed to learn highly complex and multi-modal functions or spaces with minimal network complexity and training data. However, ONNs also exhibit certain drawbacks, such as strict dependence on the operators in the operator set library, the mandatory search for the best operator set for each layer/neuron, and the need to set (fix) the operator sets of the output layer neuron(s) in advance. Self-organized ONNs (Self-ONNs) with generative neurons can address all these drawbacks without any prior search or training and with elegant computational complexity.
However, generative neurons still perform “localized” kernel operations, and hence the kernel size of a neuron at a particular layer solely determines the capacity of the receptive fields and the amount of information gathered from the previous layer. In order to improve the receptive field size and even to find the best possible location for each kernel, non-localized kernel operations for Self-ONNs are embedded in a novel neuron model that is superior to the generative neurons, hence called the “super (generative) neurons”. This tutorial will cover the natural evolution of artificial neuron and network models, starting from the ancient (linear) neuron model of the 1940s up to the super neurons and new-generation Self-ONNs. The focus will be particularly on numerous image and video processing applications such as image restoration, denoising, super-resolution, classification, and regression, where Self-ONNs, especially with super neurons, have achieved state-of-the-art performance levels with a significant gap.
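
To make the contrast between a “linear” convolutional neuron and a generative neuron more concrete, the following Python sketch may help. It assumes the formulation commonly described for Self-ONNs, in which the nodal operator of each kernel element is approximated by a truncated power series of order Q, so that the neuron sums Q correlations applied to the element-wise powers of its input. The function name `generative_neuron_1d`, the 1D setting and the omission of the activation function are simplifications made for this illustration, not details stated in the abstract above.

```python
from typing import List, Sequence


def generative_neuron_1d(
    x: Sequence[float],
    weights: Sequence[Sequence[float]],
    bias: float = 0.0,
) -> List[float]:
    """Valid-mode 1D output of one generative neuron (assumed formulation).

    weights[q - 1][j] multiplies x[i + j] ** q, for q = 1..Q.
    With Q == 1 this reduces to an ordinary (linear) correlation,
    i.e. the conventional convolutional neuron.
    """
    kernel_size = len(weights[0])
    if any(len(w_q) != kernel_size for w_q in weights):
        raise ValueError("all power kernels must have the same size")
    out: List[float] = []
    for i in range(len(x) - kernel_size + 1):
        acc = bias
        for q, w_q in enumerate(weights, start=1):
            for j in range(kernel_size):
                acc += w_q[j] * x[i + j] ** q
        out.append(acc)
    return out


if __name__ == "__main__":
    signal = [1.0, 2.0, 3.0]
    # Q = 1: plain linear kernel [1, -1]
    print(generative_neuron_1d(signal, [[1.0, -1.0]]))
    # Q = 2: first-order term on the left tap, squared term on the right tap
    print(generative_neuron_1d(signal, [[1.0, 0.0], [0.0, 1.0]]))
```

Under this assumption, the shape of each nodal function is learned through the weights of the higher-order terms rather than selected from a fixed operator set library, which is the property that removes the operator search mentioned above. Note that the kernel remains localized: each output still only sees `kernel_size` neighbouring inputs.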

Artificial Neural Networks are the state-of-the-art machine learning paradigm, boosting the performance on almost all application scenarios tested till today. The topic of the proposed tutorial will provide the following:

Serkan Kiranyaz (SM’13) is a Professor at Qatar University, Doha, Qatar. He has published 2 books, 5 book chapters, 4 patents, more than 75 journal papers in high impact journals, and more than 100 papers in international conferences. He has made contributions to evolutionary optimization, machine learning, bio-signal analysis, and computer vision, with applications to recognition, classification, and signal processing. Prof. Kiranyaz has co-authored papers that were nominated for or received the “Best Paper Award” at ICIP 2013, ICPR 2014, ICIP 2015, and IEEE TSP 2018. He had the most popular articles in the years 2010 and 2016, and the most-cited article in 2018 in IEEE Transactions on Biomedical Engineering. During 2010-2015 he authored the 4th most-cited article of the Neural Networks journal. His research team won the 2nd and 1st places in the PhysioNet Grand Challenges 2016 and 2017, among 48 and 75 international teams, respectively.

Alexandros Iosifidis is an Associate Professor at the Department of Electrical and Computer Engineering, Aarhus University, Denmark, where he leads the Machine Learning and Computational Intelligence group. He is a Senior Member of IEEE and served as an Officer of the Finnish IEEE Signal Processing/Circuits and Systems Chapter from 2016 to 2018. He is currently a member of the Technical Area Committee on Visual Information Processing of the European Association for Signal Processing, and he leads the Machine Intelligence research area of the Centre for Digitalisation, Big Data and Data Analytics at Aarhus University. He serves as Associate Editor in Chief for the Neurocomputing journal, as an Associate Editor for the IEEE Access and Frontiers on Signal Processing journals, as an Area Editor for the Signal Processing: Image Communication journal, and as an Academic Editor for the BMC Bioinformatics journal. He has served as Area Chair at several international conferences, including IEEE ICIP 2018-2021. His research focuses on Statistical Machine Learning, Deep Learning, Computer Vision and Financial Engineering. He has (co-)authored 79 articles in international journals, 100 conference papers, 6 conference abstracts, 4 book chapters, and one patent on topics within his expertise.

Dat Thanh Tran received the B.A.Sc. degree in Automation Engineering from Hameen University of Applied Sciences, and M.Sc. degree in Data Engineering and Machine Learning from Tampere University in 2017 and 2019 respectively. He is now working as a Doctoral Researcher in Signal Analysis and Machine Intelligence Group led by Professor Moncef Gabbouj at Tampere University. His research interests include statistical pattern recognition and machine learning, especially machine learning models with efficient computation and novel neural architectures, finding applications in computer vision as well as financial time-series analysis.

Junaid Malik received his Bachelor's degree in Electrical Engineering from National University of Science and Technology, Pakistan in 2013. He completed his MSc in Information Technology with majors in Signal Processing from Tampere University of Technology in 2017. He is currently pursuing a PhD degree from Tampere University, Finland and works in SAMI research group as a Doctoral Researcher. His research interests include salient object segmentation, discriminative learning-based image restoration and operational neural networks.

Moncef Gabbouj (F’11) is a well-established world expert in the field of image processing and held the prestigious post of Academy of Finland Professor during 2011-2015. He has been leading the Multimedia Research Group for nearly 25 years and managed successfully a large number of projects over 18M Euro. He has supervised 45 PhD theses and over 50 MSc theses. He is the author of several books and over 700 papers. His research interests include Big Data analytics, multimedia content-based analysis, indexing and retrieval, artificial intelligence, machine learning, pattern recognition, nonlinear signal and image processing and analysis, voice conversion, and video processing and coding. Dr. Gabbouj is a Fellow of the IEEE and a member of the Academia Europaea and the Finnish Academy of Science and Letters. He is the past Chairman of the IEEE CAS TC on DSP and committee member of the IEEE Fourier Award for Signal Processing. He served as associate editor and guest editor of many IEEE, and international journals. He was also the General Chair of IEEE ICIP 2020 and IEEE ISCAS 2019.

One of the primary goals of computer vision is understanding of visual scenes. Object detection and segmentation are fundamental problems in computer vision which enable many applications, including autonomous driving, augmented reality, medical image analysis, etc.

This tutorial covers recent progress of object detection and segmentation in both 2D and 3D modalities. We will cover in detail the most recent work on object detection and segmentation. Our goal is to show existing connections between the techniques specialized for different input modalities and relevant tasks, and provide some insights about future work resolving current challenges.

In conjunction with the tutorial, we are open-sourcing several new 2D and 3D object detection and segmentation systems. We hope that this pairing will help researchers who are interested primarily in visual detection and segmentation to build and benchmark their systems more easily.

Chunhua Shen is a Professor at The University of Adelaide, Australia. He is also an adjunct Professor of Data Science and AI at Monash University. He is a Project Leader and Chief Investigator with the Australian Research Council Centre of Excellence for Robotic Vision (ACRV). His research interests lie at the intersection of computer vision and statistical machine learning. His recent work has been on large-scale image retrieval and classification, object detection and pixel labelling using deep learning. He received the Australian Research Council Future Fellowship in 2012.

Tao Kong is a research scientist at Bytedance AI Lab. Before that, he received his Ph.D. degree in computer science from Tsinghua University, under the supervision of Prof. Fuchun Sun. His Ph.D. dissertation was awarded the 2020 Excellent Doctoral Dissertation Nomination Award of CAAI. From Oct 2018 to Mar 2019, he visited the Grasp Lab at the University of Pennsylvania, supervised by Prof. Jianbo Shi. His research interests are computer vision and machine learning, especially high-level visual understanding.

Shaoshuai Shi is a PhD student at the Multimedia Lab (MMLab) of The Chinese University of Hong Kong, supervised by Prof. Xiaogang Wang and Prof. Hongsheng Li. He has been awarded the Hong Kong PhD Fellowship and the Google PhD Fellowship. His research focuses on 3D scene understanding with point cloud representations, especially 3D object detection from point clouds in autonomous driving scenarios. His works (PointRCNN, Part-A2-Net, PV-RCNN, etc.) continually push 3D object detection forward with diverse strategies, and his open-source 3D detection toolbox OpenPCDet greatly benefits the 3D detection community by supporting a wide range of methods within one framework.

Deep learning has been widely used in modern machine learning problems such as computer vision and image processing applications due to its high performance. Yet, as network sizes become larger, deep learning solutions become more expensive in memory and computational requirements. The backpropagation-centric network optimization technique is computationally inefficient. As sustainability is becoming an imperative, it is urgent to explore an alternative green learning technology that is competitive with deep learning in performance yet requires significantly less computation and a much smaller model size. To this end, the successive subspace learning (SSL) methodology provides an attractive alternative. The Media Communications Lab (MCL) at the University of Southern California (USC) has been devoted to this objective since 2015. A sequence of papers that focus on light-weight machine learning systems has been published. In this tutorial, I will present an overview of this emerging research area and point out future research directions.

Dr. C.-C. Jay Kuo received his Ph.D. degree from the Massachusetts Institute of Technology in 1987. He is now with the University of Southern California (USC) as Director of the Media Communications Laboratory and Distinguished Professor of Electrical Engineering and Computer Science. His research interests are in the areas of media processing, compression and understanding. Dr. Kuo was the Editor-in-Chief for the IEEE Trans. on Information Forensics and Security in 2012-2014. Dr. Kuo is a Fellow of AAAS, IEEE and SPIE. He has guided 156 students to their Ph.D. degrees and supervised 30 postdoctoral research fellows. Dr. Kuo is a co-author of 300 journal papers, 950 conference papers and 14 books. Dr. Kuo received the 2017 IEEE Leon K. Kirchmayer Graduate Teaching Award, the 2019 IEEE Computer Society Edward J. McCluskey Technical Achievement Award, the 2019 IEEE Signal Processing Society Claude Shannon-Harry Nyquist Technical Achievement Award and the 2020 IEEE TCMC Impact Award.

The latest video coding standard, VVC (Versatile Video Coding), jointly developed by ITU-T and ISO/IEC, was finalized in July 2020, and in September 2020 Fraunhofer HHI made the optimized VVC software encoder (VVenC) and VVC software decoder (VVdeC) implementations publicly available on GitHub. Key application areas for VVC include ultra-high-definition video (e.g. 4K or 8K resolution), video with a high dynamic range and wide color gamut (e.g., with transfer characteristics specified in Rec. ITU-R BT.2100), and video for immersive media applications such as 360° omnidirectional video, in addition to the applications that have commonly been addressed by prior video coding standards. Important design criteria for VVC have been low computational complexity on the decoder side and friendliness for parallelization on various algorithmic levels.

This tutorial details the open encoder implementation VVenC with a specific focus on the challenges and opportunities in implementing the myriad of new coding tools. This includes algorithmic optimizations for specific coding tools such as block partitioning, motion estimation as well as implementation specific optimizations such as SIMD vectorization and parallelization approaches. Methods to approximate and increase subjectively perceived quality based on a novel block-based XPSNR model and the quantization parameter adaptation algorithm in VVenC will be discussed. Additionally, topics like rate-control, error-propagation analysis and video coding in general in a context of modern video codecs will be discussed based on real-world examples of problems encountered during codec development.

The video coding community has traditionally been driven by industrial as well as academic research activities. Our tutorial proposal strives to provide a comprehensive overview of a publicly available optimized implementation of the most recent VVC video coding standard, including in-depth discussion of implemented algorithms and approaches. It is believed to be of highest relevance for researchers in the area of video coding, video processing systems, implementation and related fields.

Benjamin Bross received the Dipl.-Ing. degree in electrical engineering from RWTH Aachen University, Aachen, Germany, in 2008.

In 2009, he joined the Fraunhofer Institute for Telecommunications – Heinrich Hertz Institute, Berlin, Germany, where he currently heads the Video Coding Systems group at the Video Coding & Analytics Department, Berlin, and is a part-time lecturer at the HTW University of Applied Sciences Berlin. Since 2010, Benjamin has been very actively involved in the ITU-T VCEG | ISO/IEC MPEG video coding standardization processes as a technical contributor, coordinator of core experiments and chief editor of the High Efficiency Video Coding (HEVC) standard [ITU-T H.265 | ISO/IEC 23008-2] and the emerging Versatile Video Coding (VVC) standard. In addition to his involvement in standardization, Benjamin coordinates standard-compliant software implementation activities. These have included the development of an HEVC encoder that is currently deployed in broadcast for HD and UHD TV channels as well as the optimized and open VVenC / VVdeC software implementations of VVC.

Besides giving talks about recent video coding technologies, Benjamin Bross is an author or co-author of several fundamental HEVC and VVC-related publications, and an author of two book chapters on HEVC and Inter-Picture Prediction Techniques in HEVC. He received the IEEE Best Paper Award at the 2013 IEEE International Conference on Consumer Electronics – Berlin in 2013, the SMPTE Journal Certificate of Merit in 2014 and an Emmy Award at the 69th Engineering Emmy Awards in 2017 as part of the Joint Collaborative Team on Video Coding for its development of HEVC.

Christian R. Helmrich received the B. Sc. degree in Computer Science from Capitol Technology University (formerly Capitol College), Laurel, MD in 2005 and the M. Sc. degree in Information and Media Technologies from the Technical University of Hamburg-Harburg, Germany, in 2008. Between 2008 and 2013 he worked on numerous speech and audio coding solutions at the Fraunhofer Institute for Integrated Circuits (IIS), Erlangen, Germany, partly as a Senior Engineer. From 2013 until 2016 Mr. Helmrich continued his work as a research assistant at the International Audio Laboratories Erlangen, a joint institution of Fraunhofer IIS and the University of Erlangen-Nuremberg, Germany, where he completed his Dr.-Ing. degree with a dissertation on audio signal analysis and coding. In 2016 Dr. Helmrich joined the Video Coding & Analytics Department of the Fraunhofer Heinrich Hertz Institute, Berlin, Germany, as a next-generation video coding researcher and developer. His main interests include audio and video acquisition, coding, storage, and preservation as well as restoration from analog sources.

Dr. Helmrich participated in and contributed to several standardization activities in the fields of audio, speech, and video coding, most recently the 3GPP EVS standard (3GPP TS 26.441), the MPEG-H 3D Audio standard (ISO/IEC 23008-3), and the MPEG-I/ITU-T VVC standard (ISO/IEC 23090-3 and ITU-T H.266). He is author or co-author of more than 40 scientific publications and a Senior Member of the IEEE.

Adam Wieckowski received the M.Sc. degree in computer engineering from the Technical University of Berlin, Berlin, Germany, in 2014. In 2016, he joined the Fraunhofer Institute for Telecommunications, Heinrich Hertz Institute, Berlin, as a Research Assistant.

He worked on the development of the software that later became the test model for VVC development. He made several technical contributions during the standardization of VVC. Since 2019, he has been a Project Manager coordinating the technical development of the VVdeC and VVenC decoder and encoder solutions for the VVC standard.